Benchmark area:

Face Image ISO Compliance Verification


This benchmark area contains face image ISO compliance verification benchmarks. Algorithms submitted to these benchmarks are required to check the compliance of face images with the ISO standard.


Benchmarks

Currently, this benchmark area contains the following benchmarks:

- FICV-TEST: A simple dataset useful to test algorithm compliance with the testing protocol (results obtained on this benchmark are only visible in the participant's private area and cannot be published).

- FICV-1.0: A large dataset of high-resolution face images related to all the requirements specified in Table I. This benchmark is described in detail in [1].

The table below reports the main characteristics of each benchmark:

| Benchmark | Minimum Image Size | Maximum Image Size | Number of Images |
|---|---|---|---|
| FICV-TEST | 762x567 | 2272x1704 | 720 |
| FICV-1.0 | 762x564 | 2272x1704 | 4868 |

Information about reproducing the FICV-TEST dataset is available on the download page.

The following sections report the list of requirements, the testing protocol and the performance indicators common to all benchmarks in this area.

Requirements

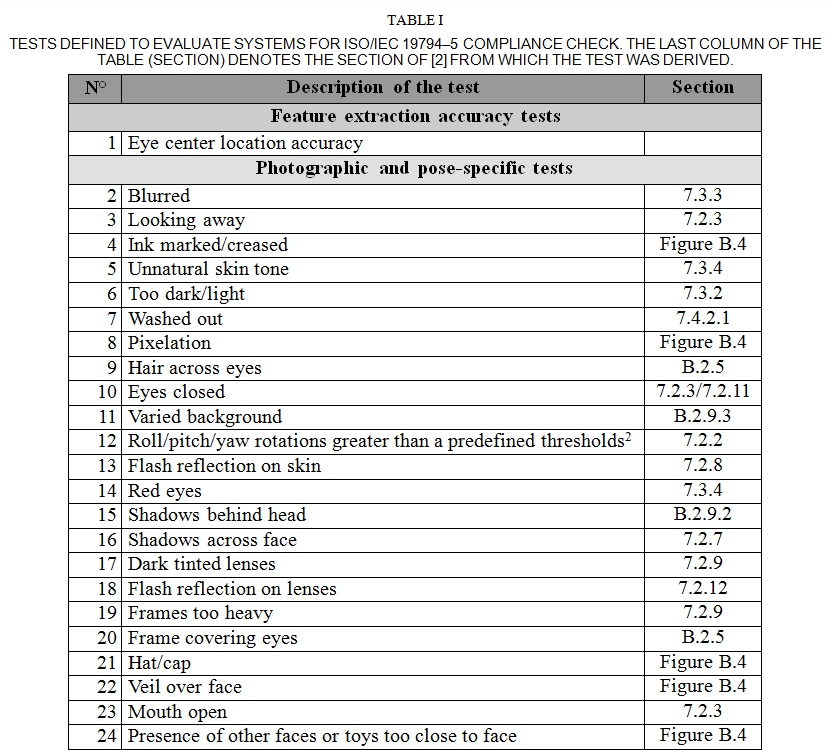

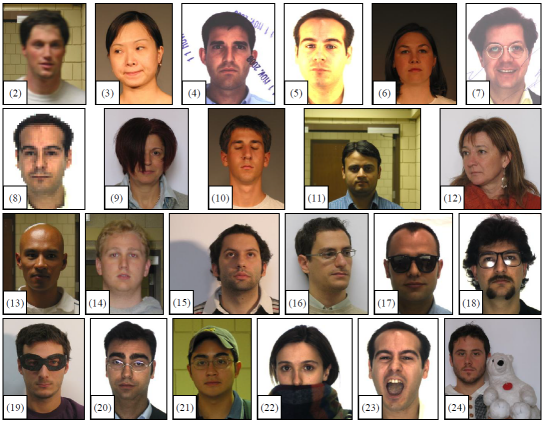

Starting from the indications provided in the ISO standard [2], a set of 24 tests has been defined to evaluate the accuracy of automatic systems for compliance verification (see Table I).

The tests can be organized into two categories:

- Feature extraction accuracy: this test evaluates the accuracy of eye center detection¹;

- Photographic and pose-specific tests: the face must be clearly visible and recognizable; this requirement implies several constraints that generate most of the uncertainties in the interpretation of the ISO standard. A precise formalization is proposed here to limit ambiguity as much as possible (see Fig. 1).

Fig. 1 – An example of a non-compliant image for each of the requirements 2..24 listed in Table I: the labels indicate the number of the related requirement. The images are cropped and zoomed here to better show the details of the non-compliant requirements.

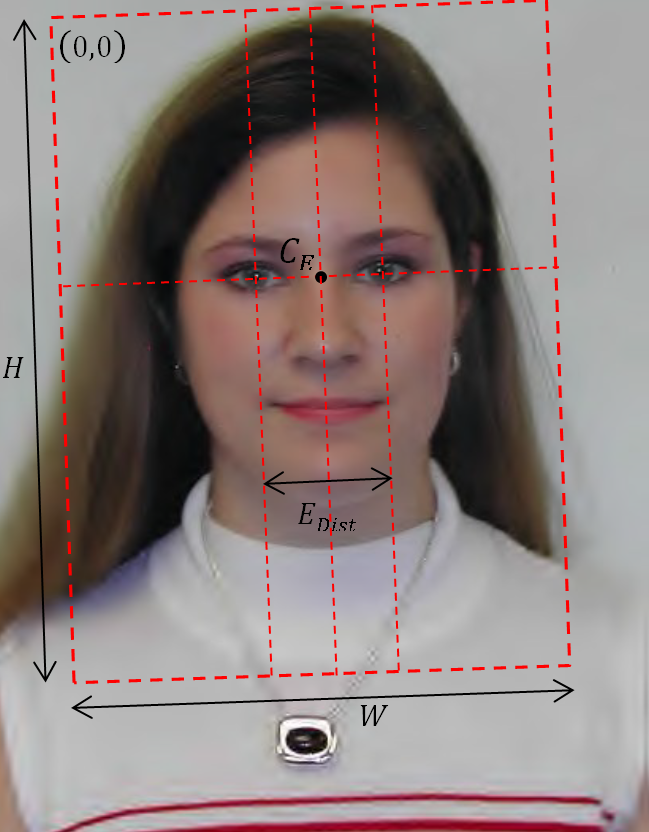

Moreover, the possibility of transforming the image into Token Format (see section 9.2.3 in [2]) is evaluated on the basis of the eye positions¹. An image is tokenizable (without padding) if:

- the distance (EDist) between eyes is at least 60 pixels;

- the rectangular region of size W×H (with W=4·EDist and H=W·4/3), determined so that the eyes are horizontally aligned and their center is in position CE=(W·1/2,W·3/5), is totally enclosed in the original image (see Fig. 2).

Fig. 2 – Geometric characteristics of the token image format.
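The two tokenizability conditions above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the benchmark's reference implementation: the function name `is_tokenizable` is hypothetical, the eye axis is assumed to run from the eye with the smaller X coordinate to the one with the larger X coordinate, and "totally enclosed" is read as all four corners of the rotated W×H rectangle lying within the 1-based pixel range of the image.

```python
import math

def is_tokenizable(eye_a, eye_b, img_w, img_h, min_edist=60.0):
    """Check the two token-format conditions for a pair of eye centers.

    eye_a, eye_b: (x, y) eye centers in image coordinates (1-based).
    img_w, img_h: image size in pixels.
    """
    # Assumption: orient the eye axis from the image-left eye to the image-right eye.
    (ax, ay), (bx, by) = sorted([eye_a, eye_b])
    dx, dy = bx - ax, by - ay
    edist = math.hypot(dx, dy)
    if edist < min_edist:          # condition 1: EDist of at least 60 pixels
        return False

    w = 4.0 * edist                # W = 4 * EDist
    h = w * 4.0 / 3.0              # H = W * 4/3
    ux, uy = dx / edist, dy / edist   # token X axis (along the eye line)
    vx, vy = -uy, ux                  # token Y axis (perpendicular, pointing down)
    cx, cy = (ax + bx) / 2.0, (ay + by) / 2.0   # eye midpoint, mapped to CE

    # Map each token-image corner back to the original image:
    # p = c + (tx - W/2) * u + (ty - 3W/5) * v
    for tx, ty in ((0.0, 0.0), (w, 0.0), (w, h), (0.0, h)):
        px = cx + (tx - w / 2.0) * ux + (ty - 0.6 * w) * vx
        py = cy + (tx - w / 2.0) * uy + (ty - 0.6 * w) * vy
        # Assumption: valid pixel coordinates are 1..img_w and 1..img_h, inclusive.
        if not (1 <= px <= img_w and 1 <= py <= img_h):
            return False           # condition 2 violated: region not enclosed
    return True
```

For horizontally aligned eyes 100 pixels apart, the region is 400 pixels wide and about 533 pixels high, so it fits in a 1000x1000 image when the eyes are near the center but not in a 600x600 image with the same eye positions.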

¹ The eye center points are defined to be the horizontal and vertical midpoints of the eye corners (see section 5.6.5 in [2]).

² ±5 degrees for rotations in pitch and yaw, and ±8 degrees for rotation in roll (see section 7.2.2 in [2]).

Protocol

Each participant is required to submit, for each algorithm, one executable named Check.exe in the form of a Win32 console application.

The executable will take its input from command-line arguments and will append its output to a text file.

- Each line of the output file must contain the following fields, separated by a blank space character:
  - ImageName is the image file name (e.g., image1.png).
  - RetVal is an integer value indicating whether the input image can be processed by the executable³:
    - 1 if the image can be processed.
    - 0 if the image cannot be processed and no further information is available.
    - -1 if the image cannot be processed due to unsupported image size.
    - -2 if the image cannot be processed due to unsupported image format.
    - -3 if the image cannot be processed due to unusable image content.
  - LE_x is an integer value indicating the X coordinate (in pixels) of the left eye center⁴.
  - LE_y is an integer value indicating the Y coordinate (in pixels) of the left eye center⁴.
  - RE_x is an integer value indicating the X coordinate (in pixels) of the right eye center⁴.
  - RE_y is an integer value indicating the Y coordinate (in pixels) of the right eye center⁴.
  - Test_2 is an integer value in the range [0;100] indicating the degree of compliance of the input image with respect to Compliance Test 2: 0 means no compliance, 100 maximum compliance. In addition, the following special characters are defined:
    - '-' if the executable is not able to evaluate this requirement.
    - '?' if the executable is usually able to evaluate this requirement but could not evaluate it on the current input image because of an identified failure (e.g., the SDK is not able to evaluate the presence of red eyes since the eyes are closed).
    - '!' if the executable is usually able to evaluate this requirement but could not evaluate it on the current input image because of an unknown failure.
  - Test_3 is analogous to Test_2 but related to test 3.
  - ...
  - Test_24 is analogous to Test_2 but related to test 24.

- C, C# and Matlab language skeletons for Check.exe are available on the download page to reduce participants' implementation effort.

- The executable has write permission only on the output file. All configuration files should be opened in read mode.

³ If the input image cannot be processed (i.e., RetVal≠1) the other fields must be empty.

⁴ The origin of the coordinate system shall be the upper left corner of the input image; the X and Y values start from 1 (and not from 0).
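As an illustration of the output line layout described above, the following Python sketch builds one line and appends it to the output file. It is not one of the official skeletons: the function names are hypothetical, and it assumes that "empty" fields for RetVal≠1 are simply omitted, so that such a line contains only ImageName and RetVal.

```python
SPECIAL_MARKS = {"-", "?", "!"}   # special characters allowed for Test_2..Test_24
NUM_TESTS = 23                    # Test_2 through Test_24

def format_result_line(image_name, ret_val, eyes=None, tests=None):
    """Build one output line: ImageName RetVal LE_x LE_y RE_x RE_y Test_2 ... Test_24.

    eyes:  (LE_x, LE_y, RE_x, RE_y), integers, 1-based pixel coordinates.
    tests: 23 entries, each an int in [0, 100] or one of '-', '?', '!'.
    """
    if ret_val != 1:
        # Assumption: when the image cannot be processed, the remaining
        # fields are left out of the line entirely.
        return f"{image_name} {ret_val}"
    if eyes is None or len(eyes) != 4:
        raise ValueError("expected four eye coordinates")
    if tests is None or len(tests) != NUM_TESTS:
        raise ValueError(f"expected {NUM_TESTS} test results")

    fields = [image_name, str(ret_val)] + [str(int(v)) for v in eyes]
    for value in tests:
        if isinstance(value, str) and value in SPECIAL_MARKS:
            fields.append(value)
        elif isinstance(value, int) and 0 <= value <= 100:
            fields.append(str(value))
        else:
            raise ValueError(f"invalid test result: {value!r}")
    return " ".join(fields)

def append_result(output_path, line):
    """Append a result line to the output file (the only file opened for writing)."""
    with open(output_path, "a", encoding="utf-8") as f:
        f.write(line + "\n")
```

A processed image therefore yields 29 space-separated fields, while an image in an unsupported format yields a line such as `bad.png -2` under the assumption above.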

Constraints

During test execution the following constraints will be enforced:

| Benchmark | Maximum time for each check | Memory allocation limit for check processes |
|---|---|---|
| FICV-TEST | 30 seconds | No limit |
| FICV-1.0 | 10 seconds | No limit |

Each check attempt that violates one of the above constraints results in a failure.
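Participants may want to verify locally that each check stays within the time limit before submitting. The sketch below shows one possible way to do so with Python's standard `subprocess` module; it is not the evaluation harness used by the benchmark, the helper name is hypothetical, and the command line passed to the executable is a placeholder because the argument syntax is not specified here.

```python
import subprocess

# Per-check time limits from the table above, in seconds.
TIME_LIMITS = {"FICV-TEST": 30, "FICV-1.0": 10}

def run_with_time_limit(command, time_limit_s):
    """Run a command and report whether it finished within the time limit.

    command: list of program and arguments (the arguments for Check.exe are
             an assumption of the caller; they are not defined in this sketch).
    Returns True if the process ended in time, False if it was stopped
    because the limit expired.
    """
    try:
        subprocess.run(
            command,
            timeout=time_limit_s,
            check=False,                      # the exit code is not judged here
            stdout=subprocess.DEVNULL,
            stderr=subprocess.DEVNULL,
        )
    except subprocess.TimeoutExpired:
        return False                          # the check would count as a failure
    return True
```

For example, `run_with_time_limit(["Check.exe", ...], TIME_LIMITS["FICV-1.0"])` would flag any single check that takes longer than 10 seconds, with the elided arguments filled in according to the skeletons on the download page.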

The following time breaks are enforced between two consecutive submissions to the same benchmark by the same participant.

| Benchmark | Minimum break |
|---|---|
| FICV-TEST | 12 hours |
| FICV-1.0 | 15 days |

Performance Evaluation

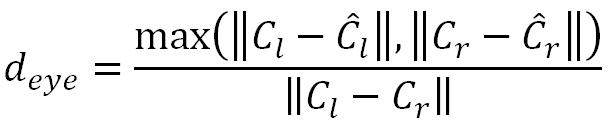

For each image, the compliance with the requirements listed in Table I is evaluated using the submitted algorithm. To evaluate the accuracy of eye localization, the relative error measure introduced in [3], based on the distances between the expected and the estimated eye positions, is calculated as:

$$d_{eye} = \frac{\max\left(\lVert C_l - \hat{C}_l \rVert, \lVert C_r - \hat{C}_r \rVert\right)}{\lVert C_l - C_r \rVert}$$

where $C_{l/r}$ and $\hat{C}_{l/r}$ are the ground truth eye positions and the positions returned by the algorithm, respectively. This measure is scale independent and therefore allows data sets with different resolutions to be compared.
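The relative eye localization error can be computed with a few lines of Python. The sketch assumes the usual form of the measure from [3], namely the larger of the two eye localization errors divided by the ground truth inter-eye distance; the function name is hypothetical.

```python
import math

def eye_localization_error(gt_left, gt_right, est_left, est_right):
    """Relative eye localization error for one image.

    gt_left, gt_right:   ground truth eye centers as (x, y).
    est_left, est_right: eye centers returned by the algorithm as (x, y).
    """
    inter_eye = math.dist(gt_left, gt_right)      # ground truth eye distance
    if inter_eye == 0:
        raise ValueError("ground truth eye centers must be distinct")
    err_left = math.dist(gt_left, est_left)       # left eye error in pixels
    err_right = math.dist(gt_right, est_right)    # right eye error in pixels
    # Normalizing by the inter-eye distance makes the measure scale independent.
    return max(err_left, err_right) / inter_eye
```

Doubling every coordinate (the same face at twice the resolution) leaves the result unchanged, which is what allows data sets with different resolutions to be compared.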

general performance indicators

Moreover, for each Photographic and pose-specific test (see Table I) some accuracy indicators are calculated. In order to perform balanced tests, the dataset has been further divided into 23 subsets, each related to a specific requirement and containing the same number of compliant and non-compliant images. It is worth noting that each subset is related to a specific characteristic and that, consequently, only the algorithm response for that characteristic is considered when calculating the indicators.

performance indicators

- EER

- Rejection (percentage of images where the requirement was not evaluated)

- Impostor/Genuine

- FMR(t)/FNMR(t)

- DET(t)

Note that, according to best practices, rejections are implicitly included in the calculation of the performance indicators by assuming that a degree of compliance equal to 0 (for the given requirement) is returned in case of rejection. This choice is aimed at discouraging algorithms from rejecting the most uncertain cases, which would otherwise improve the performance measured over the processed images.
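The following Python sketch illustrates how the indicators for one requirement could be computed from the per-image scores of a balanced subset. It is an illustration, not the benchmark's evaluation code: the function names are hypothetical, an image is assumed to be accepted as compliant when its score is at least the threshold t, FNMR is taken as the fraction of compliant images below t and FMR as the fraction of non-compliant images at or above t, and the EER is approximated at the integer threshold where the two rates are closest.

```python
REJECTION_MARKS = ("-", "?", "!")

def to_score(value):
    """Map an output field to a score; a rejection counts as compliance degree 0."""
    return 0 if value in REJECTION_MARKS else int(value)

def rejection_rate(values):
    """Fraction of images for which the requirement was not evaluated."""
    return sum(v in REJECTION_MARKS for v in values) / len(values)

def fmr_fnmr(compliant, non_compliant, t):
    """Return (FMR(t), FNMR(t)) for lists of integer scores in [0, 100]."""
    fnmr = sum(s < t for s in compliant) / len(compliant)
    fmr = sum(s >= t for s in non_compliant) / len(non_compliant)
    return fmr, fnmr

def approx_eer(compliant, non_compliant):
    """Approximate the EER by scanning integer thresholds 0..101.

    Returns (eer, threshold), where eer is the mean of FMR and FNMR at the
    first threshold that minimizes |FMR - FNMR|.
    """
    best = None
    for t in range(0, 102):
        fmr, fnmr = fmr_fnmr(compliant, non_compliant, t)
        gap = abs(fmr - fnmr)
        if best is None or gap < best[0]:
            best = (gap, (fmr + fnmr) / 2.0, t)
    return best[1], best[2]
```

Because `to_score` turns every rejection into a score of 0, a rejected compliant image raises FNMR at every threshold above 0, so rejecting uncertain images cannot improve the indicators.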

Terms and Conditions

All publications and works that cite the FICV Benchmark Area must reference [1].

Bibliography

[1] M. Ferrara, A. Franco, D. Maio and D. Maltoni, "Face Image Conformance to ISO/ICAO standards in Machine Readable Travel Documents", IEEE Transactions on Information Forensics and Security, vol. 7, no. 4, pp. 1204-1213, August 2012.

[2] ISO/IEC 19794-5, Information technology - Biometric data interchange formats - Part 5: Face image data, 2011.

[3] O. Jesorsky, K. J. Kirchberg and R. W. Frischholz, "Robust face detection using the Hausdorff distance", Lecture Notes in Computer Science 2091, pp. 90-95, 2001.
